The first wave of LLM-enabled apps used large models running in the cloud. It's a running joke that most AI startups are just a wrapper around the OpenAI API. That approach means sending your prompt and context data to a third-party API.

This typically doesn't matter too much when you're just generating funny elephant pictures. But if you're building consumer or professional apps, you don't want to leak sensitive and private data. Retrieval-augmented generation (RAG) compounds the problem by feeding even more data into the model, silently, in the background.

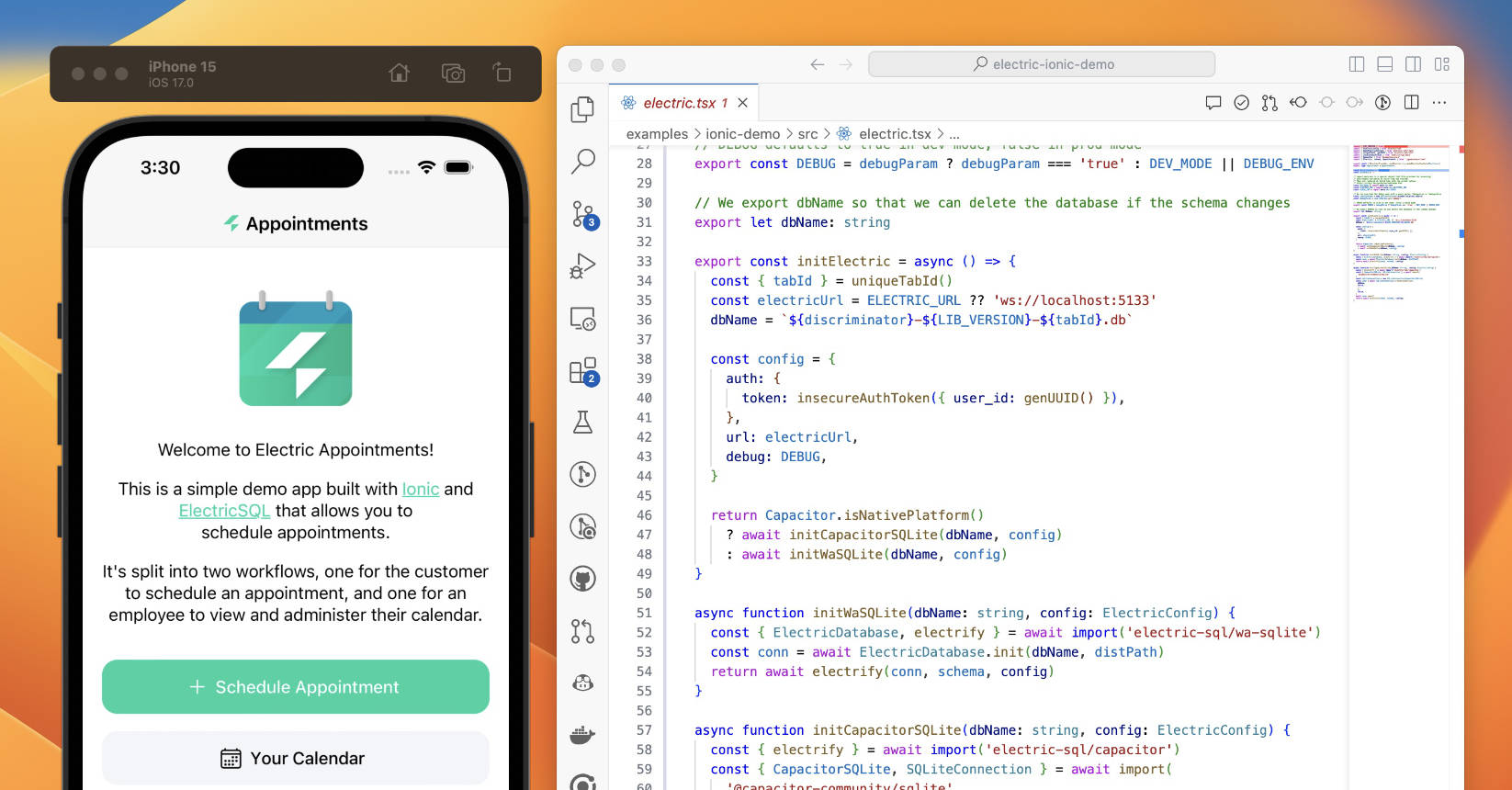

Developers are looking for alternative architectures that can run RAG without leaking private and sensitive data. ElectricSQL is an open source platform for developing local-first software. So we teamed up with the awesome folks at Tauri to dive into the challenge: could we assemble a fully open source stack for running local AI on device with RAG?

This led us on a wild technical journey where we took Postgres, bundled it with pgvector and compiled it to run cross-platform inside the Rust backend of a Tauri app. We then compiled llama2 and fastembed into the same Tauri app and built a fully open source, privacy-preserving, local AI application running both vector search and RAG.
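
To make "vector search and RAG" concrete, here is a minimal sketch in plain Python of the retrieval step and the prompt it produces. It is an illustration under stated assumptions, not the code from the app: the function names, the tiny hand-written embeddings and the prompt template are all hypothetical. In the stack described above, the embeddings would come from an embedding model and the similarity search would run inside Postgres.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vec, documents, k=2):
    """Return the k documents whose embeddings are closest to the query."""
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(query_vec, d["embedding"]),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, retrieved):
    """Assemble the prompt that would be handed to the language model."""
    context = "\n".join(f"- {d['text']}" for d in retrieved)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Hypothetical private data with made-up 3-dimensional embeddings.
documents = [
    {"text": "Invoice 1042 is overdue.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "The office closes at 6pm.", "embedding": [0.0, 0.2, 0.9]},
    {"text": "Invoice 1043 was paid in March.", "embedding": [0.8, 0.3, 0.1]},
]

# Stand-in for the embedding of the user's question.
query_vec = [1.0, 0.2, 0.0]

top = retrieve(query_vec, documents, k=2)
prompt = build_prompt("Which invoices are outstanding?", top)
print(prompt)
```

Note that the assembled prompt contains the retrieved private text verbatim. With a cloud model that text leaves the device on every query; with a local model it never does.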